The HTTP part 2 - Connections Queue

Multiple concurrent connections

What happens when someone asks for a website? Our server constantly listens for an incoming connection, processes the request, prepares and writes a response, closes the connection and goes back to listening for the next one. All of this work is done on one thread. What if another request arrives while we are in the middle of preparing a response and not listening for incoming connections? Is it queued? If so, where?

The backlog

Incoming connections are queued.

When we created a socket to listen for connections, we used the ServerSocket(port) constructor, which delegates to another constructor.

    public ServerSocket(int port) throws IOException {
        this(port, 50, null);
    }

    /**
     * @param backlog requested maximum length of the queue of incoming
     *                connections.
     */
    public ServerSocket(int port, int backlog) throws IOException {
        this(port, backlog, null);
    }

There is a backlog parameter that is passed to the listen() system call underneath. The queue is managed not by our application but by the operating system.

Default value

Is 50 a good default value? As with every default value - you don’t know unless you measure it. If you are the only user of the server and you are not making concurrent requests, a smaller value may be just fine. If you expect users to spam your server with thousands of requests, a higher value would be recommended. The point is that it’s good to know that such a value exists and that you can tune it for your case.

How can we check the queue utilization?

I don’t believe there is an API in Java for this, but you can run lsof to check open connections on a given port.

    $ lsof -i :8090
    COMMAND   PID              USER   FD   TYPE             DEVICE SIZE/OFF NODE NAME
    java    23785 jakub.dyszkiewicz   40u  IPv6 0x8bf0b7d1c46b6bd3      0t0  TCP *:8090 (LISTEN)
    java    23785 jakub.dyszkiewicz   44u  IPv6 0x8bf0b7d1c46b99d3      0t0  TCP localhost:57990->localhost:8090 (ESTABLISHED)
    java    23785 jakub.dyszkiewicz   45u  IPv6 0x8bf0b7d1e55ef553      0t0  TCP localhost:8090->localhost:57990 (ESTABLISHED)
    java    23785 jakub.dyszkiewicz   46u  IPv6 0x8bf0b7d1e55ec753      0t0  TCP localhost:57991->localhost:8090 (ESTABLISHED)

Why can’t we just max out this value? What is the cost?

When a connection is queued, it is established first. Last time, we learned that every new connection means a new file descriptor, and the operating system limits the number of open file descriptors. The limit exists because every FD costs memory. I’ve seen setups going as far as 10M open connections, but on a machine with 200GB of memory! If we are drowning in pending requests, we should probably look for another way to solve the problem. Maybe we need to scale horizontally, or maybe we are in the middle of a DDoS attack.
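To see that a queued connection really is established before the application touches it, here is a minimal sketch. It assumes a loopback server on an OS-chosen port (port 0) and an arbitrary backlog of 5; the helper name connectsWithoutAccept is made up for this illustration. The server never calls accept(), yet the client's connect() should still complete, because the operating system finishes the handshake and parks the connection in the backlog.

```kotlin
import java.net.InetAddress
import java.net.InetSocketAddress
import java.net.ServerSocket
import java.net.Socket

// Hypothetical helper for illustration; not part of the server built in this series.
fun connectsWithoutAccept(): Boolean =
    // Port 0 lets the OS pick a free port; the backlog of 5 is an arbitrary choice.
    ServerSocket(0, 5, InetAddress.getLoopbackAddress()).use { server ->
        Socket().use { client ->
            // The server never calls accept(); the OS completes the handshake on its own.
            client.connect(InetSocketAddress(server.inetAddress, server.localPort), 1000)
            client.isConnected
        }
    }

fun main() {
    println("Connected without accept(): ${connectsWithoutAccept()}")
}
```

Each such parked connection already holds resources on the server side, which is why a huge backlog is not free.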

What’s the default in other servers?

I had trouble finding this, but to be honest - it doesn’t matter that much. Most popular servers are multithreaded. In this model, connections are accepted as fast as possible and delegated to other threads, so the backlog won’t be a bottleneck.
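A rough sketch of that multithreaded model, assuming a fixed thread pool as the "other threads". The acceptLoop function, the pool size of 4 and the hardcoded response are all hypothetical choices for this illustration and not how any particular server is implemented.

```kotlin
import java.net.InetAddress
import java.net.ServerSocket
import java.net.Socket
import java.net.SocketException
import java.util.concurrent.ExecutorService
import java.util.concurrent.Executors

// Hypothetical sketch: the accepting thread only accepts, workers do the slow part.
fun acceptLoop(serverSocket: ServerSocket, workers: ExecutorService, handle: (Socket) -> Unit) {
    while (true) {
        val socket = try {
            serverSocket.accept() // returns as soon as a queued connection is available
        } catch (e: SocketException) {
            return // accept() throws once the server socket is closed
        }
        // Hand the connection over immediately and go back to accepting.
        workers.execute { socket.use(handle) }
    }
}

fun main() {
    val serverSocket = ServerSocket(0, 50, InetAddress.getLoopbackAddress())
    val workers = Executors.newFixedThreadPool(4) // pool size is an arbitrary choice
    val acceptor = Thread {
        acceptLoop(serverSocket, workers) { socket ->
            socket.getOutputStream().write("HTTP/1.1 200 OK\r\n\r\nHello, World!".toByteArray())
        }
    }
    acceptor.start()

    // One client, just to show the loop answering.
    val response = Socket(serverSocket.inetAddress, serverSocket.localPort).use {
        it.getInputStream().bufferedReader().readText()
    }
    println(response)

    serverSocket.close() // makes accept() throw, which ends the loop
    workers.shutdown()
}
```

Because the accepting thread spends almost no time per connection, the queue drains quickly and its exact size matters much less.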

Dropping connections

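Right now our server can hold 50 connections in the queue. Let’s parametrise it, change it to 1 and see if the third connection is dropped: the first connection is being handled, the second waits in the queue, so the third should have nowhere to go.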

    class Server(
        val port: Int,
        val maxIncomingConnections: Int = 1
    ) : AutoCloseable {

        private val serverSocket = ServerSocket(port, maxIncomingConnections)

        ...

        private fun handleConnection(socket: Socket) {
            val input = socket.getInputStream().bufferedReader()
            val output = PrintWriter(socket.getOutputStream())

            val requestLine = input.readLine()
            logger.info("Received request: {}", requestLine)

            Thread.sleep(5000) // sleeping for 5s before writing response

            output.print("""
                HTTP/1.1 200 OK

                Hello, World!""".trimIndent())
            output.flush()
        }

        ...
    }

Now we should be able to fire 3 requests concurrently and see a problem. Let’s test it!

    @Test
    fun `server should refuse to accept more connections than maxIncomingConnections`() {
        // given
        val server = Server(port = 8090, maxIncomingConnections = 1)

        // when
        val completedRequest = LongAdder() // we will be adding from multiple threads
        // we are starting 3 concurrent requests, 500ms apart
        val clientsThreadPool = Executors.newFixedThreadPool(3)
        repeat(times = 3) {
            clientsThreadPool.submit {
                try {
                    val connection = URL("http://localhost:8090/").openConnection() as HttpURLConnection
                    connection.connect()
                    if (connection.responseCode == 200) {
                        completedRequest.increment()
                    }
                    connection.disconnect()
                } catch (e: Exception) {
                    e.printStackTrace()
                    throw e
                }
            }
            Thread.sleep(500)
        }
        // no more tasks will be submitted; without shutdown() awaitTermination always waits the full timeout
        clientsThreadPool.shutdown()
        // it should be enough for 3 requests to complete if limiting was somehow broken
        clientsThreadPool.awaitTermination(20, TimeUnit.SECONDS)

        // then
        assertThat(completedRequest.sum()).isEqualTo(2)

        // cleanup
        server.close()
    }

And it fails!

    org.junit.ComparisonFailure:
    Expected :2L
    Actual   :3L

3 requests were completed. Wait, but the third connection should be dropped! What’s going on? To debug what happened we can trace what packets were sent, but first…

Quick basics of TCP

TCP is a Transport Layer protocol in the 7-layer OSI model. It frees you from worrying about things like missing or out-of-order packets. If a packet is not delivered, it will be retransmitted. To send something over TCP, you first have to establish a connection. To do that:

- Client sends a SYN segment to request a connection
- Server answers with a SYN-ACK, meaning that it also requests a connection and acknowledges the client’s request
- Client sends an ACK segment to acknowledge the server’s request

The connection is now established; this is when a socket is created. Both parties can now send data over the connection. A standard HTTP flow would look like this:

- Client sends a segment with a request
- Server sends an ACK segment confirming that it got the data
- Server now sends a segment with a response
- Client sends an ACK segment confirming that it got the data

At some point, we want to close the connection (immediately after data exchange in our case - at least for now). To do that:

- Server sends a FIN,ACK segment to signal that it’s closing the connection
- Client sends an ACK to confirm that it got that information
- Now Client sends a FIN,ACK to signal that it’s closing the connection
- And Server responds with an ACK; the connection is now closed

There is also the RST segment, which resets the connection. It is sent when something goes wrong, for example when the client sends data packets after the connection is closed.
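The three phases above (establish, exchange data, close) map roughly onto the socket API as sketched below. This is an illustration under assumptions: a throwaway loopback server on an OS-chosen port, a made-up one-line "ping" exchange instead of real HTTP, and a hypothetical function name exchange. The comments describe what the operating system typically does at each call; the application itself never sees SYN, ACK or FIN segments.

```kotlin
import java.net.InetAddress
import java.net.ServerSocket
import java.net.Socket
import kotlin.concurrent.thread

// Hypothetical helper: one request/response round trip over a fresh TCP connection.
fun exchange(): String =
    ServerSocket(0, 1, InetAddress.getLoopbackAddress()).use { server ->
        val serverThread = thread {
            // accept() hands over a connection whose handshake is already done.
            server.accept().use { socket ->
                val request = socket.getInputStream().bufferedReader().readLine()
                // Writing sends data segments; the client's OS acknowledges them.
                socket.getOutputStream().write("echo: $request\n".toByteArray())
            } // leaving use {} closes the socket, so the server side sends its FIN
        }

        // The constructor connects: SYN, SYN-ACK and ACK are exchanged here.
        val reply = Socket(server.inetAddress, server.localPort).use { socket ->
            // Request data goes out in a segment; the server's OS acknowledges it.
            socket.getOutputStream().write("ping\n".toByteArray())
            socket.getInputStream().bufferedReader().readLine()
        } // closing the client socket sends the client's FIN

        serverThread.join()
        reply
    }

fun main() {
    println(exchange())
}
```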

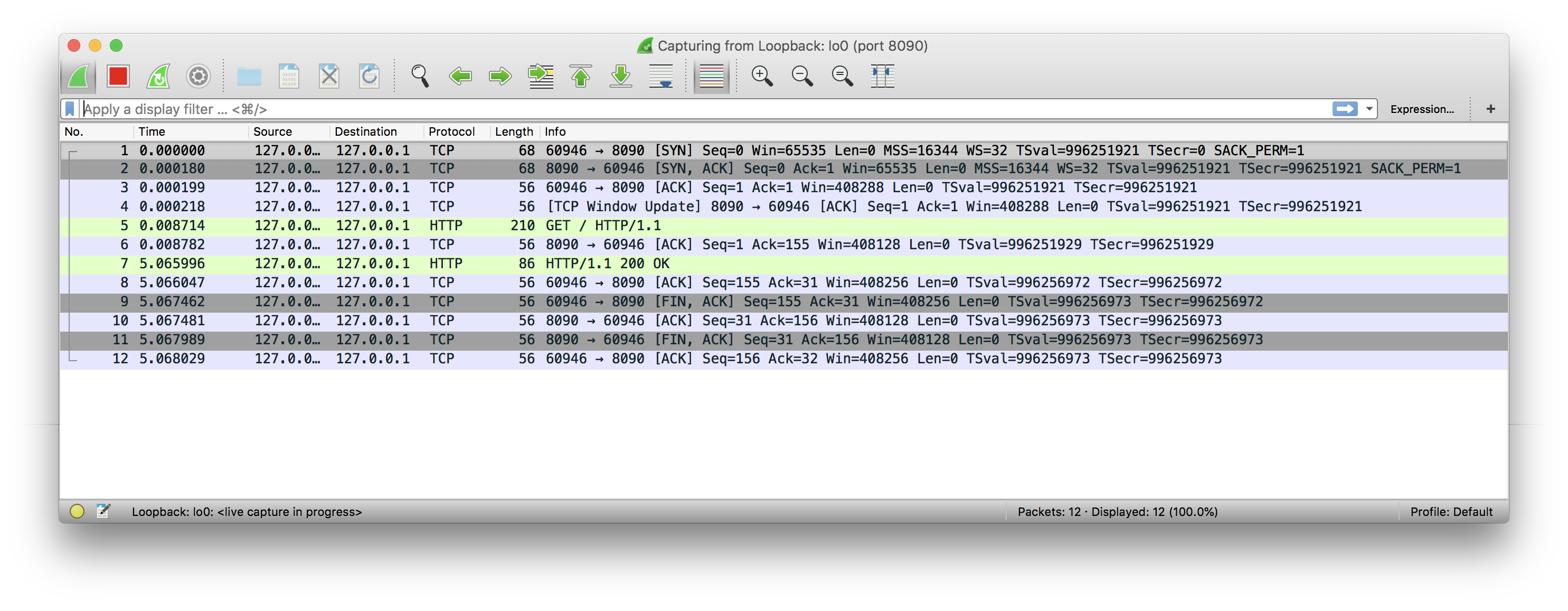

Let’s see that in action in Wireshark - a tool for network monitoring. We are going to monitor just one request for now.

60328 is the port of the client and 8090 is the port of the server.

From 1 to 3 are packets responsible for the connection establishment.

The 4th packet is a TCP Window Update, which tells the client how many more bytes the server can receive before its buffer is full.

5 and 6 are a request to the server.

7 and 8 are a response to the client.

From 9 to 12 we can see the sequence for closing the connection from both sides.

Back to 3 requests

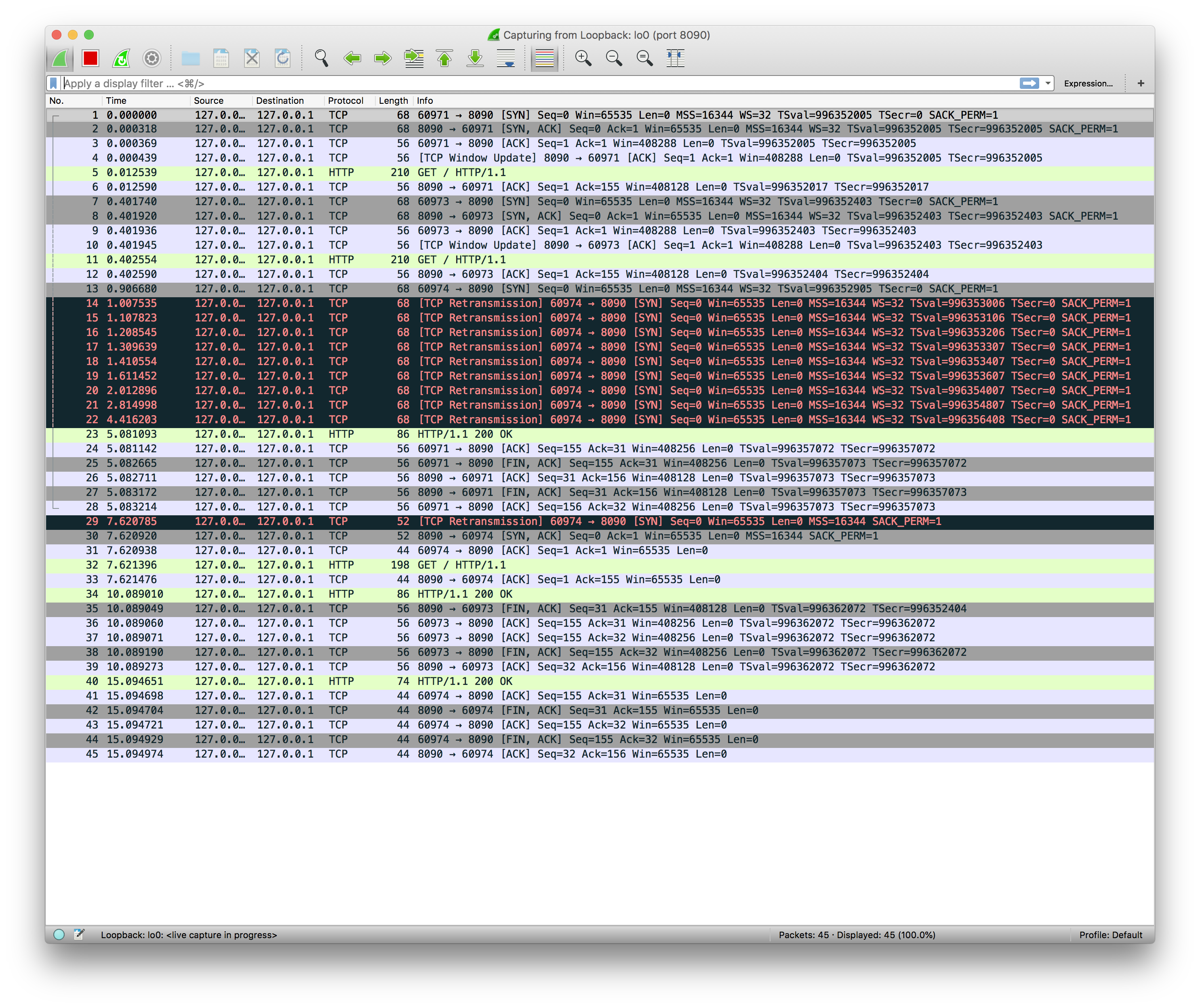

Ok, now that we are comfortable with Wireshark, we can run it for 3 requests.

Oh, that’s right! When the client sends the third connection request, the server does not respond with RST or FIN; it simply ignores the packet. The client does not receive a SYN-ACK after its SYN segment, so it assumes that the packet was lost, which triggers standard TCP retransmission. That’s actually great! If the server had returned RST, we would have to implement retry logic on the client side, but now the operating system does it for us.

We can also look at the listen() system call documentation:

The backlog argument defines the maximum length to which the queue of pending connections for sockfd may grow. If a connection request arrives when the queue is full, the client may receive an error with an indication of ECONNREFUSED or, if the underlying protocol supports retransmission, the request may be ignored so that a later reattempt at connection succeeds.

Which confirms the behaviour we observed.

Is it ok to spam retransmission packets and wait for connection forever?

You can see that in our case retransmissions start out about 100ms apart, but then back off to about 3s between the last ones (look at the time column on the last screenshot), so our server is not drowning in useless packets.
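TCP stacks generally back off exponentially, doubling the wait after each unanswered retransmission. The toy calculation below only illustrates that idea: the starting interval of 100ms and the 6 retries are assumptions picked to resemble the capture, the function name backoffSchedule is hypothetical, and the real intervals are decided by the operating system.

```kotlin
// Hypothetical illustration of exponential backoff; real intervals are chosen by the OS.
fun backoffSchedule(initialMillis: Long, retries: Int): List<Long> =
    generateSequence(initialMillis) { it * 2 } // double the wait after every attempt
        .take(retries)
        .toList()

fun main() {
    // prints [100, 200, 400, 800, 1600, 3200]
    println(backoffSchedule(initialMillis = 100, retries = 6))
}
```

With doubling, six attempts are enough to get from 100ms to roughly 3s between packets, so the number of retransmitted segments stays small.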

However, waiting forever is a problem. Let’s say we’ve got a distributed system made of two services, Orders and Users. A user asks Orders to buy an offer, then Orders asks Users whether the user is banned. If Users is under heavy load and can’t accept connections, Orders blocks and waits forever. Now the connections in Orders are blocked, so it cannot accept any more, and the whole platform is essentially dead. That’s why, after some period of time, we should assume that the server won’t respond. This is called a connection timeout and you should always set it in your HTTP client. I will cover it in more detail when we start building our HTTP client.
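The same idea can be sketched at the plain socket level, assuming we only want to know whether a connection can be established within a deadline. The helper name canConnect and the 1000ms timeout are hypothetical choices for this example.

```kotlin
import java.io.IOException
import java.net.InetAddress
import java.net.InetSocketAddress
import java.net.ServerSocket
import java.net.Socket

// Hypothetical helper: true if a TCP connection was established within the timeout.
fun canConnect(host: InetAddress, port: Int, timeoutMillis: Int): Boolean =
    try {
        Socket().use { socket ->
            // Throws SocketTimeoutException if the handshake does not finish in time.
            socket.connect(InetSocketAddress(host, port), timeoutMillis)
            true
        }
    } catch (e: IOException) {
        false // timed out, refused, or otherwise unreachable
    }

fun main() {
    val loopback = InetAddress.getLoopbackAddress()
    ServerSocket(0, 1, loopback).use { server ->
        println(canConnect(loopback, server.localPort, timeoutMillis = 1000))
    }
}
```

A caller like Orders can then fail fast or fall back instead of blocking for as long as the operating system keeps retransmitting.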

Test fix

Let’s add a connection timeout to our client:

    ...
    clientsThreadPool.submit {
        try {
            val connection = URL("http://localhost:8090/").openConnection() as HttpURLConnection
            connection.connectTimeout = 1000 // give up if the connection is not established within 1s
            connection.connect()
    ...

And now the test passes! We can see java.net.SocketTimeoutException: connect timed out in the output.

Summary

We learned how our single-threaded server handles multiple connections. We learned the basic flow of TCP and we used Wireshark, which is extremely helpful for debugging server and client behaviour. We didn’t actually improve anything in our server; we only gained a deeper understanding of how it already works. Next up, we’re going to build some abstractions. Our server will be able to respond with something other than Hello, World! and we will get rid of the hardcoded Thread.sleep. That will help us test server behaviour in the next scenarios.

As always, code samples are available on my GitHub.

Other articles of the series

This article is a part of the HTTP series